Introduction

TL;DR

- A library for managing LLM API rate limits in distributed environments (K8s, Lambda, etc.)

- Uses Redis to share state across multiple processes

- Simply run pip install llmratelimiter, then call await limiter.acquire(tokens=5000)

When you call LLM (Large Language Model) APIs, rate limits are unavoidable. Major providers, including OpenAI, Anthropic, and Google Vertex AI, impose TPM (Tokens Per Minute) and RPM (Requests Per Minute) limits.

This becomes particularly problematic in scenarios like:

- Multiple worker processes calling the API simultaneously

- Sharing APIs across microservices

- Sending large volumes of requests in batch processing

- Running in serverless environments like AWS Lambda or Cloud Functions

In serverless environments, each function instance runs independently and shares no memory with the others, so in-memory rate limiting is ineffective. You need distributed rate limiting backed by external shared storage such as Redis.

This article introduces LLMRateLimiter, a library developed to solve these problems.

Development Background

This library was born from a project that simultaneously processed tens of thousands of LLM API calls using Celery in a Kubernetes environment.

The Challenge:

- Dozens of Celery Workers calling LLM APIs in parallel

- Each Worker has no knowledge of other Workers' state

- Simply sending requests immediately hits rate limits

- A storm of 429 Too Many Requests errors

The Solution:

- Distributed rate limiting using Redis to coordinate across all Workers

- FIFO queue for fair ordering

- Atomic operations via Lua scripts to prevent race conditions

What is LLMRateLimiter?

LLMRateLimiter is a distributed rate limiting library using Redis. Its FIFO queue-based algorithm controls API requests fairly and efficiently.

Key Features

| Feature | Description |
|---|---|
| FIFO Queue | First-come-first-served processing prevents thundering herd |
| Distributed Support | Redis backend shares state across processes/servers |
| Flexible Limits | Supports Combined TPM, Split TPM, and Mixed modes |
| Auto Retry | Exponential backoff with jitter for Redis failures |
| Graceful Degradation | Allows requests to pass when Redis is unavailable |

Installation

pip install llmratelimiter

Or using uv:

uv add llmratelimiter

Basic Usage

Simple Example

    from llmratelimiter import RateLimiter

    # Just specify Redis URL and limits
    limiter = RateLimiter(
        "redis://localhost:6379",
        "gpt-4",
        tpm=100_000,  # 100K tokens/minute
        rpm=100,      # 100 requests/minute
    )

    # Acquire capacity before calling the API
    await limiter.acquire(tokens=5000)

    # client is assumed to be an openai.AsyncOpenAI instance
    response = await client.chat.completions.create(...)

Using an Existing Redis Client

    from redis.asyncio import Redis
    from llmratelimiter import RateLimiter

    redis = Redis(host="localhost", port=6379)
    limiter = RateLimiter(redis=redis, model="gpt-4", tpm=100_000, rpm=100)

SSL Connection

    # Use the rediss:// scheme for an SSL connection
    limiter = RateLimiter("rediss://localhost:6379", "gpt-4", tpm=100_000)

Rate Limiting Modes

LLMRateLimiter supports three modes:

| Mode | Use Case | Configuration |
|---|---|---|
| Combined | OpenAI, Anthropic | tpm=100000 |
| Split | GCP Vertex AI | input_tpm=..., output_tpm=... |
| Mixed | Custom | Both settings |

Combined Mode

For providers like OpenAI and Anthropic with a single TPM limit combining input and output:

    limiter = RateLimiter(
        "redis://localhost:6379", "gpt-4",
        tpm=100_000,  # Combined TPM limit
        rpm=100,
    )

    await limiter.acquire(tokens=5000)

Split Mode

For providers like GCP Vertex AI with separate limits for input and output:

    limiter = RateLimiter(
        "redis://localhost:6379", "gemini-1.5-pro",
        input_tpm=4_000_000,  # Input: 4M tokens/minute
        output_tpm=128_000,   # Output: 128K tokens/minute
        rpm=360,
    )

    # Specify input and output token counts
    await limiter.acquire(input_tokens=5000, output_tokens=2048)

Tip

The output token count has to be estimated in advance. Using the max_tokens parameter value is common practice.

Mixed Mode

Use both combined and split limits simultaneously:

    limiter = RateLimiter(
        "redis://localhost:6379", "custom-model",
        tpm=500_000,          # Combined limit
        input_tpm=4_000_000,  # Input limit
        output_tpm=128_000,   # Output limit
        rpm=360,
    )
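How the three modes relate can be sketched in plain Python. This is an illustrative model, not the library's implementation: `Limits` and `fits` are hypothetical names, and the sketch assumes that a request must satisfy every limit that is configured, with the combined limit counting input plus output tokens.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Limits:
    """Hypothetical container mirroring the tpm / input_tpm / output_tpm settings."""
    tpm: Optional[int] = None
    input_tpm: Optional[int] = None
    output_tpm: Optional[int] = None


def fits(limits: Limits, used_in: int, used_out: int, req_in: int, req_out: int) -> bool:
    """Return True if the request fits under every configured limit."""
    if limits.tpm is not None and used_in + used_out + req_in + req_out > limits.tpm:
        return False  # combined limit exceeded
    if limits.input_tpm is not None and used_in + req_in > limits.input_tpm:
        return False  # input limit exceeded
    if limits.output_tpm is not None and used_out + req_out > limits.output_tpm:
        return False  # output limit exceeded
    return True


mixed = Limits(tpm=500_000, input_tpm=4_000_000, output_tpm=128_000)
print(fits(mixed, used_in=400_000, used_out=90_000, req_in=5_000, req_out=2_048))
print(fits(mixed, used_in=400_000, used_out=99_000, req_in=5_000, req_out=2_048))
```

The first call prints True. The second prints False because the combined total would reach 506,048 tokens, even though the input and output limits on their own still have room.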

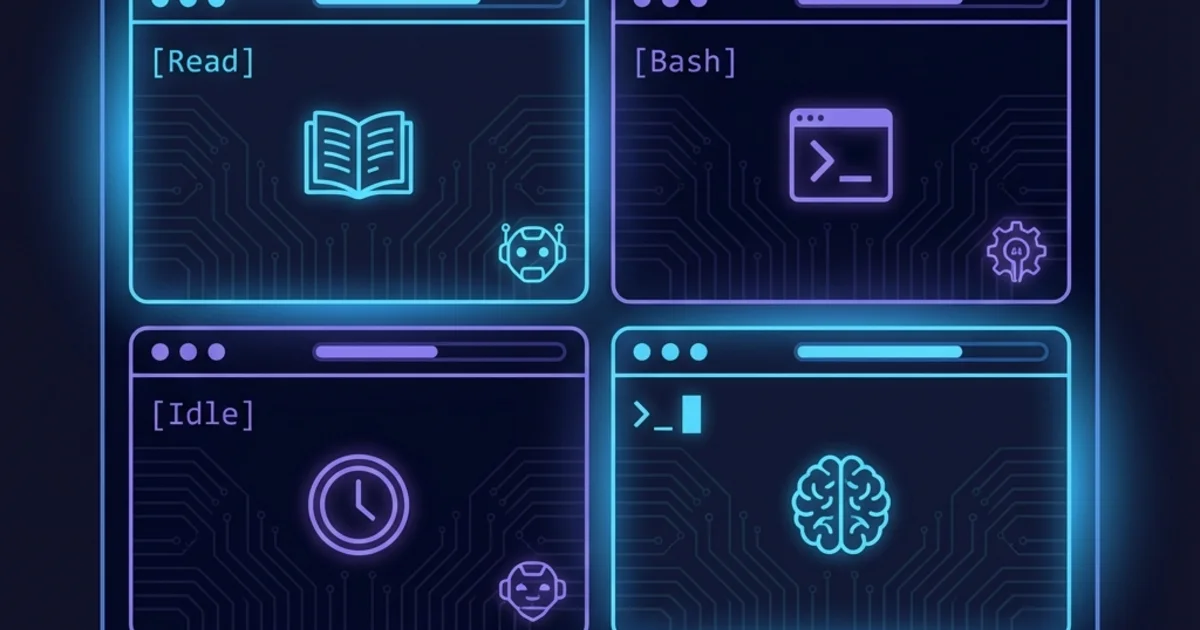

Architecture

Overall Structure

Multiple application instances share state through Redis to collectively respect rate limits.

Request Flow

When acquire() is called, a Lua script executes the following steps atomically on Redis:

- Delete Expired Records: Clean up old records outside the window

- Aggregation: Calculate token and request counts within the current window

- Limit Check: Compare against TPM/RPM limits

- Slot Allocation: Grant a slot immediately if within limits; otherwise calculate when capacity will become available

- Record Storage: Save consumption record to Redis Sorted Set
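The five steps above can be sketched on an in-memory list to make the logic concrete. This is a simplified model under stated assumptions, not the library's Lua script: `acquire_sketch` and `WINDOW_SECONDS` are hypothetical names, a plain Python list stands in for the Redis Sorted Set, and the rule that a new slot never precedes an already granted one is an assumption about how FIFO ordering could be kept.

```python
from typing import List, Tuple

WINDOW_SECONDS = 60.0


def acquire_sketch(
    records: List[Tuple[float, int]],  # (slot timestamp, tokens), oldest first
    tokens: int,
    tpm: int,
    rpm: int,
    now: float,
) -> float:
    """Apply the five steps to an in-memory list; return the wait in seconds."""
    if tokens > tpm or rpm < 1:
        raise ValueError("request can never fit within the limits")

    # 1. Delete expired records that have left the window.
    records[:] = [(ts, n) for ts, n in records if ts > now - WINDOW_SECONDS]

    # 2. Aggregate token and request counts within the window.
    used_tokens = sum(n for _, n in records)
    used_requests = len(records)

    # 3 and 4. Check the limits. While they are exceeded, expire the oldest
    # record and move the slot to the moment that record leaves the window.
    # Starting at the newest existing slot keeps the order first-come-first-served.
    slot = max([now] + [ts for ts, _ in records])
    for ts, n in records:
        if used_tokens + tokens <= tpm and used_requests + 1 <= rpm:
            break
        used_tokens -= n
        used_requests -= 1
        slot = max(slot, ts + WINDOW_SECONDS)

    # 5. Store the consumption record under its slot time.
    records.append((slot, tokens))
    return slot - now


window: List[Tuple[float, int]] = []
print(acquire_sketch(window, 600, tpm=1000, rpm=10, now=0.0))   # fits immediately
print(acquire_sketch(window, 600, tpm=1000, rpm=10, now=10.0))  # waits for the first record to expire
```

The first call returns 0.0. The second returns 50.0: the record made at t=0 leaves the 60-second window at t=60, which is 50 seconds after the second request arrives.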

Why Lua Scripts Are Necessary

The biggest challenge when implementing rate limiting in distributed environments is race conditions.

The Race Condition Problem

A naive Python implementation creates race conditions like this:

    # This is dangerous: a race condition occurs between (1) and (3)
    async def acquire_naive(redis, tokens, limit):
        current = int(await redis.get("token_count") or 0)  # (1) Read
        if current + tokens <= limit:  # (2) Check
            await redis.set("token_count", current + tokens)  # (3) Write
            return True
        return False

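If Worker 1 and Worker 2 run the GET at the same time, both read the same count. With 5,000 tokens remaining, for example, both conclude that a 3,000-token request fits, and both proceed. Result: 6,000 tokens are consumed against 5,000 available, and because the second write overwrites the first, the counter undercounts as well.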
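The race can be reproduced without Redis. In this sketch a dictionary stands in for the shared key, and `asyncio.sleep(0)` marks the point where another worker gets to run between the read and the write. The limit of 5,000 tokens and the 3,000-token requests are example values chosen for the demonstration.

```python
import asyncio

LIMIT = 5_000
store = {"token_count": 0}  # stands in for a shared Redis key


async def acquire_naive(tokens: int) -> bool:
    current = store["token_count"]               # (1) Read
    await asyncio.sleep(0)                       # another worker runs here
    if current + tokens <= LIMIT:                # (2) Check against a stale value
        store["token_count"] = current + tokens  # (3) Write, clobbering the other update
        return True
    return False


async def main() -> list:
    # Two workers each ask for 3,000 tokens at the same moment.
    return await asyncio.gather(acquire_naive(3_000), acquire_naive(3_000))


results = asyncio.run(main())
print(results, store["token_count"])
```

This prints `[True, True] 3000`: both requests were granted, for 6,000 tokens against a limit of 5,000, while the counter records only 3,000.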

Solution with Lua Scripts

Lua scripts execute atomically on the Redis server. Other commands are blocked during script execution, preventing race conditions.
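As a minimal sketch of the idea, the read, check, and write from the naive version can be moved into one Lua script. This is a simplified fixed counter for illustration, not LLMRateLimiter's actual sliding-window script; `CHECK_AND_CONSUME` and `acquire_atomic` are hypothetical names, and `redis` is assumed to be a `redis.asyncio.Redis` client.

```python
# Lua source executed atomically by Redis: read, check, and write in one step.
CHECK_AND_CONSUME = """
local current = tonumber(redis.call('GET', KEYS[1]) or '0')
local tokens = tonumber(ARGV[1])
local limit = tonumber(ARGV[2])
if current + tokens <= limit then
    redis.call('INCRBY', KEYS[1], tokens)
    return 1
end
return 0
"""


async def acquire_atomic(redis, key: str, tokens: int, limit: int) -> bool:
    """Consume `tokens` from the counter at `key` if the limit allows it."""
    # EVAL arguments: script, number of keys, then the keys and the arguments.
    granted = await redis.eval(CHECK_AND_CONSUME, 1, key, tokens, limit)
    return granted == 1
```

Because no other command can run between the GET and the INCRBY, two workers can no longer both pass the check on the same stale value.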

Sliding Window

Rate limiting is managed with a sliding window of 60 seconds (configurable): only consumption recorded within the last 60 seconds counts against the limits.

Connection Management and Retry

RedisConnectionManager

For production environments, use RedisConnectionManager with connection pooling and retry functionality:

    from llmratelimiter import RateLimiter, RedisConnectionManager, RetryConfig

    manager = RedisConnectionManager(
        "redis://localhost:6379",
        max_connections=20,
        retry_config=RetryConfig(
            max_retries=5,
            base_delay=0.1,
            max_delay=10.0,
            exponential_base=2.0,
            jitter=0.1,
        ),
    )

    limiter = RateLimiter(manager, "gpt-4", tpm=100_000, rpm=100)

Exponential Backoff

Failed Redis operations are retried with exponential backoff, using the RetryConfig settings shown above.
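To give a feel for the numbers, here is a sketch of a common backoff formula using the same parameter names as RetryConfig. How the library combines these fields internally is an assumption here: the sketch treats jitter as a plus-or-minus fraction of the delay, and `backoff_delay` is a hypothetical helper.

```python
import random


def backoff_delay(
    attempt: int,
    base_delay: float = 0.1,
    max_delay: float = 10.0,
    exponential_base: float = 2.0,
    jitter: float = 0.1,
) -> float:
    """Delay in seconds before retry number `attempt` (0-based)."""
    # Grow exponentially, but never beyond max_delay.
    delay = min(base_delay * exponential_base ** attempt, max_delay)
    # Spread retries out by a random factor in [1 - jitter, 1 + jitter].
    return delay * (1 + random.uniform(-jitter, jitter))


# With jitter disabled, the delays are 0.1, 0.2, 0.4, 0.8, 1.6 seconds.
for attempt in range(5):
    print(attempt, round(backoff_delay(attempt, jitter=0.0), 3))
```

The jitter matters in a distributed setup: without it, workers that failed at the same moment would all retry at the same moment too.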

Graceful Degradation

When Redis is unavailable, requests pass through instead of being blocked:

    # Even if Redis is down, this returns immediately
    result = await limiter.acquire(tokens=5000)

    # If wait_time=0 and queue_position=0,
    # the request may have passed without rate limiting
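The fail-open pattern behind this behavior can be sketched as a small wrapper. This is not the library's code: `AcquireResult` and `acquire_fail_open` are hypothetical, only the two fields mentioned above are modelled, and the built-in `ConnectionError` stands in for whatever exception the Redis client actually raises.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class AcquireResult:
    """Hypothetical result type with the two fields named above."""
    wait_time: float
    queue_position: int


async def acquire_fail_open(acquire, **kwargs) -> AcquireResult:
    """Call `acquire`, but let the request through if the backend is unreachable."""
    try:
        return await acquire(**kwargs)
    except (ConnectionError, asyncio.TimeoutError):
        # Fail open: no waiting, no queue slot, and no rate limiting applied.
        return AcquireResult(wait_time=0.0, queue_position=0)
```

The trade-off is deliberate: while Redis is down, requests keep flowing and may run into the provider's own 429 responses, instead of every worker stalling on the limiter.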

Monitoring

Use get_status() to check current usage:

    status = await limiter.get_status()

    print(f"Model: {status.model}")
    print(f"Token Usage: {status.tokens_used}/{status.tokens_limit}")
    print(f"Input Tokens: {status.input_tokens_used}/{status.input_tokens_limit}")
    print(f"Output Tokens: {status.output_tokens_used}/{status.output_tokens_limit}")
    print(f"Requests: {status.requests_used}/{status.requests_limit}")
    print(f"Queue Depth: {status.queue_depth}")

Best Practices

1. Accurate Token Estimation

When using Split Mode, estimating output token count is important:

    # Use the max_tokens parameter for estimation
    estimated_output = min(request_max_tokens, 4096)

    result = await limiter.acquire(
        input_tokens=len(prompt_tokens),  # prompt_tokens: the already tokenized prompt
        output_tokens=estimated_output,
    )
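If no tokenizer is at hand, a rough character-based estimate can fill in for the input side. The helpers below are hypothetical, and the ratio of about four characters per token is only a rule of thumb for English text; for accurate counts, use the provider's tokenizer.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate from character count (heuristic, not exact)."""
    return max(1, round(len(text) / chars_per_token))


def estimate_request(prompt: str, max_tokens: int, output_cap: int = 4096) -> dict:
    """Build keyword arguments for acquire() in Split mode."""
    return {
        "input_tokens": estimate_tokens(prompt),
        # Reserve the worst case: the model may generate up to max_tokens.
        "output_tokens": min(max_tokens, output_cap),
    }


print(estimate_request("Summarize the following report. " * 10, max_tokens=1024))
```

The resulting dictionary can be passed straight through, as in `await limiter.acquire(**estimate_request(prompt, max_tokens=1024))`.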

2. Use Context Managers

Ensure connections are properly cleaned up:

    from llmratelimiter import RateLimiter, RedisConnectionManager

    async with RedisConnectionManager("redis://localhost:6379") as manager:
        limiter = RateLimiter(manager, "gpt-4", tpm=100_000, rpm=100)
        await limiter.acquire(tokens=5000)
    # Connection pool automatically closed

3. Use Separate Limiters Per Model

    gpt4_limiter = RateLimiter(manager, "gpt-4", tpm=100_000, rpm=100)
    gpt35_limiter = RateLimiter(manager, "gpt-3.5-turbo", tpm=1_000_000, rpm=500)

4. Utilize Burst Allowance

To tolerate temporary spikes, set burst_multiplier:

    limiter = RateLimiter(
        "redis://localhost:6379", "gpt-4",
        tpm=100_000,
        rpm=100,
        burst_multiplier=1.5,  # Allow 50% burst
    )
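As a quick sanity check on what a multiplier of 1.5 means, the arithmetic below assumes the multiplier simply scales each configured limit. Whether the library applies it to both TPM and RPM is an assumption, and `effective_limit` is a hypothetical helper.

```python
def effective_limit(limit: int, burst_multiplier: float = 1.0) -> int:
    """Limit after applying the burst allowance (assumed to be a plain scale factor)."""
    return int(limit * burst_multiplier)


print(effective_limit(100_000, 1.5))  # tokens per minute with a 50% burst
print(effective_limit(100, 1.5))      # requests per minute with a 50% burst
```

Under that assumption the limiter would admit up to 150,000 tokens and 150 requests per minute, so the multiplier should stay within what the provider actually tolerates.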

Summary

LLMRateLimiter is a library for efficiently managing LLM API rate limits in distributed environments.

Key Benefits:

- Simple API: Just pass a Redis URL to get started

- Distributed Support: Share state across multiple processes/servers

- Flexibility: Supports various LLM provider limit models

- Reliability: Resilient to failures with retry and graceful degradation

Links:

- GitHub: https://github.com/Ameyanagi/LLMRateLimiter

- Documentation: https://Ameyanagi.github.io/LLMRateLimiter/

- PyPI: https://pypi.org/project/LLMRateLimiter/

Give it a try! Feedback and contributions are welcome.